Editorial note: A version of this blog post was previously published in the JISC IE Technical Foundations blog.

At this summer's Institutional Web Management Workshop in Sheffield (IWMW 2010), I demonstrated how it is becoming feasible for a content management system both to consume and to produce linked data resources. In a parallel session, I presented an overview of the current state of play in 'Semantic content management: consuming and producing RDF in Drupal'. In a video-recorded plenary session (specifically in a nine-minute segment of the recording, from 34 through 42 minutes), I briefly reviewed how a modern CMS can enrich local datasets with remote linked datasets and, by engaging with the web of data, produce new insights. Here I explain the scope of what I demonstrated at this event, outline some practical implementation procedures, and evaluate initial results.

Scope: interim check on long sojourn towards promised land

The scope of my demonstration was limited: quickly testing current feasibility of consuming and producing linked data sets in a real-world context. Using recent developments in content management technology, work on this demo was designed to check:

- how easily local datasets can be combined and enriched with remote datasets

- how effectively a content management system can engage with linked data to provide new insights

- how close we are to that promised land where linked data technology can provide real benefits to a broad range of websites

Choosing a context immediately relevant to participants in this year's Institutional Web Management Workshop, I decided to build a 'proof of concept' website providing a synoptic view of institutions and speakers participating over many years in IWMW events. From IWMW organisers I understood that quite a lot of data related to these events was already available, in discrete forms. Event information going back more than 10 years was accessible via many separate IWMW RSS feeds and web pages. Other datasets of interest, however, languished in office spreadsheets (until now, buried within the classic 'information silo'). With so many sources of data available, the challenge was to find a way of presenting disparate sets of information in a unified, manageable, understandable way. Having read with interest the explanations and arguments in "Exploiting Linked Data For Building Web Applications" by Michael Hausenblas (2009), my objective was to check how linked data technologies can have practical applications within a real-world website:

Semantic Web technologies are around now for a while, already. However, in the development of real-world Web applications these technologies have ... little impact to date. With linked data this situation has changed dramatically in the past couple of months. This article shows how linked datasets can be exploited to build rich Web applications with little effort.

Hausenblas observes that "in contrast to the full-fledged Semantic Web vision ... linked data is mainly about publishing structured data in RDF using URIs rather than focusing on the ontological level or inferencing":

This simplification -- just as the Web simplified the established academic approaches of Hypertext systems -- lowers the entry barrier for data provider, hence fosters a wide-spread adoption.

This 'simplicity wins' argument rings true with regard to many technology development patterns, and with human nature. Personally, it strongly reminds me of what I noticed during early days of the web. During the mid-1990s I could well understand how SGML adherents disliked the relatively gross simplifications of HTML and its growing preoccupation with presentation and formatting rather than semantic structure. Nevertheless, it did seem clear then, as now, that simplification and widespread adoption were highly correlated. Is it really becoming simpler, as Hausenblas and others recently claim, so that "linked datasets can be exploited to build rich Web applications with little effort", thanks to advances in content management systems?

This summer, as I worked on a prototype website for my IWMW presentation, I remembered how long the journey has been to the long-anticipated 'semantic web' promised land. In Tim Berners-Lee's first recorded proposal for the World Wide Web, as drafted in March 1989 and then revised in 1990, there are remarkable indications of 'semantic web' notation, aligned with much later development of RDF (as noted by Dan Brickley in 'Semantic Web History: Nodes and Arcs 1989-1999').

Looking back again this summer, I noticed how the 20 years elapsed since this first proposal did seem, in comparison to normally fast-paced 'internet time', very much like 40 years of wandering in the desert. I wondered if the 'semantic web' promised land, flowing with linked data, was at last in sight. With its core integration of a robust RDF API and its much-heralded functionality to produce and consume linked data, the forthcoming Drupal 7 promised, after two years of active planning and development, to bring linked data technologies into a widely used content management system:

While it is worthwhile to mention that the first of these [content management] systems appeared at around the same time as Semantic Web technologies emerged, with RDF being standardized in 1999, the development of CMSs and Semantic Web technologies have gone largely separate paths. Semantic Web technologies have matured to the point where they are increasingly being deployed on the Web. But the HTML Web still dwarfs this emerging Web of Data and — boosted by technologies such as CMSs — is still growing at much faster pace than the Semantic Web.... Approaching site administrators of widely used CMSs with easy-to-use tools to enhance their site with Linked Data will not only be to their benefit, but also significantly boost the Web of Data. (Corlosquet, Delbru, Clark, Polleres, Decker, 2009)

In designing a prototype website for the IWMW event, I specifically wanted to evaluate:

- Beyond the handful of apps and websites described by Hausenblas as exemplary integrations of linked data (Faviki, DBpedia Mobile, BBC Music, Musicbrainz), how easy would exploiting linked data resources be for a broad range of websites managed by an open source content management system?

- How faithfully can a modern CMS implement best-practice guidelines for exploiting linked data, such as those explained by Hausenblas?

- Where some guidelines cannot yet be implemented, are practical benefits achievable?

Implementation procedures: from hypothetical to actual

Hausenblas describes how key linked data principles could be applied in building a hypothetical website:

imagine a historical ... website http://example.org/cw/ that deals with the topic 'Cold War' ... [and] assume the site is powered by a popular software such as Wordpress or Drupal. (Hausenblas, 2009)

Whereas Hausenblas bases explanations on hypotheticals, I wanted to evaluate more closely what can actually be achieved in building a website that exploits linked data resources, using a specific, currently available CMS. Given the buzz of anticipation for the forthcoming release of version 7 with core RDF integration, I chose Drupal as the best candidate for a feasibility test. Hausenblas explains, at a high level, two "steps needed for exploiting linked datasets in an exemplary Web application":

In order to exploit linked dataset[s] properly, basically two steps are required: (i) prepare your own data, and (ii) select appropriate target datasets.

Preparing local data

As explained in my post on the prototype website entitled 'Consuming and producing RDF: current arrangements', my first stage of work concentrated on local datasets:

- extracting available data from IWMW registration details kept in office spreadsheets

- compiling event information (session abstracts and speaker bios) from RSS feeds on IWMW website

- cross-checking IWMW web pages for detailed information about sessions and speaker affiliations

During this first stage of local data extraction and compilation, I used Perl scripts to create relevant datasets. Because it needed ad hoc data-munging scripts, this stage ultimately required more time and effort, and proved more tedious, than the more routine retrieval of linked data resources in stage two.
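The feed-compilation step can be sketched roughly as follows. This is a minimal illustration in Python (not the Perl scripts mentioned above), and it assumes plain, un-namespaced RSS 2.0 feeds; the sample feed, its URL, and the `parse_rss_items` helper are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample feed; a real IWMW feed may use a different RSS flavour.
SAMPLE_RSS = """
<rss version="2.0">
  <channel>
    <title>Example event feed</title>
    <item>
      <title>Plenary talk: Example session</title>
      <link>http://example.org/sessions/1</link>
      <description> Abstract of the example session. </description>
    </item>
    <item>
      <title>Parallel session: Another example</title>
      <link>http://example.org/sessions/2</link>
    </item>
  </channel>
</rss>
"""


def parse_rss_items(rss_xml):
    """Return one dict per <item>, with missing fields as empty strings."""
    root = ET.fromstring(rss_xml.strip())
    items = []
    for item in root.iter("item"):
        items.append({
            "title": (item.findtext("title") or "").strip(),
            "link": (item.findtext("link") or "").strip(),
            "description": (item.findtext("description") or "").strip(),
        })
    return items


sessions = parse_rss_items(SAMPLE_RSS)
```

Each resulting dict can then be cross-checked by hand against the corresponding web page before loading, which is where most of the ad hoc effort tends to go.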

Selecting linked data resources

Once these local datasets were extracted and compiled for IWMW speakers and their affiliations, it became clear how DBpedia could supply quite a lot of useful linked data. During this second stage of work on the prototype, I used a combination of Perl scripts to retrieve and process RDF triples (including textual descriptions, statistics, geolocation coordinates, etc.) from DBpedia, and then Drupal utility modules ('Feeds' and 'Taxonomy CSV') to batch-load this data into relevant segments of the prototype 'IWMW synoptic' website.
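As a rough sketch of this retrieve-and-flatten step (in Python rather than Perl, and not the scripts actually used), the code below queries DBpedia's public SPARQL endpoint for a description and coordinates, then flattens the standard SPARQL JSON results into CSV. CSV as the hand-off format, the choice of properties, and all function names are assumptions made for illustration.

```python
import csv
import io
import json
import urllib.parse
import urllib.request

DBPEDIA_ENDPOINT = "https://dbpedia.org/sparql"

# Assumed properties: an English rdfs:comment plus WGS84 latitude/longitude.
QUERY_TEMPLATE = """
PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>
PREFIX geo:  <http://www.w3.org/2003/01/geo/wgs84_pos#>
SELECT ?comment ?lat ?long WHERE {
  <%(uri)s> rdfs:comment ?comment ;
            geo:lat ?lat ;
            geo:long ?long .
  FILTER (lang(?comment) = "en")
} LIMIT 1
"""


def fetch_bindings(resource_uri):
    """Run the query over HTTP (needs network access; not called here)."""
    params = urllib.parse.urlencode({"query": QUERY_TEMPLATE % {"uri": resource_uri}})
    request = urllib.request.Request(
        DBPEDIA_ENDPOINT + "?" + params,
        headers={"Accept": "application/sparql-results+json"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)["results"]["bindings"]


def bindings_to_rows(name, bindings):
    """Flatten SPARQL JSON bindings into plain dicts keyed by variable name."""
    rows = []
    for binding in bindings:
        row = {"name": name}
        for variable, cell in binding.items():
            row[variable] = cell["value"]
        rows.append(row)
    return rows


def rows_to_csv(rows, fieldnames):
    """Serialise rows as CSV text, ignoring any fields not listed."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Keeping the network call separate from the flattening step makes it easy to re-run the CSV conversion on saved results without querying DBpedia again.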

Note: Forthcoming modules such as 'SPARQL views', as explained by Lin Clark in a project proposal and video, are designed to enable "average users to integrate SPARQL into their website workflow" without need for external scripts. As I worked this summer on retrieving and integrating linked data into a demo website, however, this facility was missing in both Drupal 6 and the Drupal 7 alphas.

Beyond these datasets from DBpedia, a range of further resources could be integrated given more time and scope to engage with the Web of Data:

- filtered datasets retrieved from SPARQL queries on DBpedia (as illustrated by Martin Poulter in his follow-up blog post 'Getting information about UK HE from Wikipedia')

- tags coordinated with Open Calais, via Faviki (correlated with DBpedia), or (more recently) via a managed thesaurus tag-recommendation service such as PoolParty using 'SKOS thesauri enriched with Linked Data'

Initial results, trends, and directions of travel

Even with the limited scope and time available for working on the 'IWMW synoptic' demo website, it was possible to produce quite a lot of initial results. Here are some links to views of local datasets enriched with linked data:

- sortable table of participating organisations, compared with distance from event (exportable in .doc and .csv formats)

- filterable and sortable table of participating organisations, compared with student numbers (exportable in .doc and .csv formats)

- interactive map of organisations contributing speakers to IWMW (clickable pop-ups to display enriched data sets)

- SPARQL endpoint producing RDF in wide range of formats (XML, JSON, Turtle etc)

- SPARQL query form, available for queries on local and remote endpoints

- selective summary of speakers' bios and abstracts (note: complete dataset not yet loaded into prototype website)

- overview of IWMW speaker affiliations (screenshot below)
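A 'distance from event' column like the one in the first table can be derived from geolocation coordinates of the kind DBpedia supplies. The sketch below uses the haversine great-circle formula; it is one plausible way to compute such a column, not necessarily how the demo site did it, and the coordinates are rounded, illustrative values.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two lat/long points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def sort_by_distance(event, organisations):
    """event: (lat, lon); organisations: list of (name, lat, lon) tuples."""
    distances = [
        (name, haversine_km(event[0], event[1], lat, lon))
        for name, lat, lon in organisations
    ]
    return sorted(distances, key=lambda pair: pair[1])


# Illustrative, rounded coordinates (assumptions, not taken from the demo data).
SHEFFIELD = (53.38, -1.47)
ORGANISATIONS = [
    ("Edinburgh", 55.95, -3.19),
    ("Bath", 51.38, -2.36),
]
ranked = sort_by_distance(SHEFFIELD, ORGANISATIONS)
```

Straight-line distance is only a proxy for travel effort, but it is cheap to compute and sorts sensibly in a table.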

How easy?

Short answer: CMS arrangements do make it remarkably easy to present local data enriched with linked data, accessible in both human-usable and machine-readable views. In the currently transitional state of Drupal development (as explained in 'Semantic content management: consuming and producing RDF in Drupal'), however, this requires quite a bit of ad hoc preparation. This summer, I needed to write custom scripts both for preparing local data and for retrieving linked data. This latter process of retrieving linked data should become easier when utility modules such as 'SPARQL views' and others become available following official release of Drupal 7. Only after a full complement of RDF modules becomes available following an official release of Drupal 7 can the optimistic vision of CMS advocates be justified:

Again, the [website] operator is in a comfortable position: for his system plug-ins exist allowing to expose data with just a few configuration changes. (Hausenblas, 2009)

My experience this summer suggests that it takes more than just 'a few configuration changes' before a CMS manager can start consuming and producing linked data robustly. Such a 'comfortable position' is not yet quite a reality.

How faithful?

Hausenblas discusses three best-practice guidelines for making a content management system "Web-of-Data compliant":

- re-using relevant ontologies and vocabularies (such as FOAF)

- exposing linked data as RDF/XML, RDFa, or in SPARQL endpoints

- minting URIs along the lines used by DBpedia (where machine-readable (RDF) and human-usable (HTML) versions are distinguished within URI spaces /resource and /html paths, ideally accessible via automated content negotiation)

Guideline 1: Re-using common vocabularies

Regarding the first guideline, I found that Drupal 6 RDF modules available this summer do facilitate re-use of commonly used vocabularies such as FOAF (and many others). In fact, just a few configuration changes were required for the demo site to output RDF such as this (abridged) excerpt:
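To illustrate the kind of FOAF-based RDF/XML involved (a hand-built stand-in, not the demo site's actual output), here is a minimal Python sketch. The example.org URIs, the person's name, and the `person_to_rdfxml` helper are all hypothetical.

```python
import xml.etree.ElementTree as ET

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
FOAF_NS = "http://xmlns.com/foaf/0.1/"

# Use conventional prefixes in the serialised output.
ET.register_namespace("rdf", RDF_NS)
ET.register_namespace("foaf", FOAF_NS)


def person_to_rdfxml(uri, name, homepage=None):
    """Describe one person with the FOAF vocabulary, serialised as RDF/XML."""
    root = ET.Element("{%s}RDF" % RDF_NS)
    person = ET.SubElement(root, "{%s}Person" % FOAF_NS, {"{%s}about" % RDF_NS: uri})
    ET.SubElement(person, "{%s}name" % FOAF_NS).text = name
    if homepage:
        ET.SubElement(person, "{%s}homepage" % FOAF_NS, {"{%s}resource" % RDF_NS: homepage})
    return ET.tostring(root, encoding="unicode")


profile_xml = person_to_rdfxml(
    "http://example.org/node/1",       # hypothetical profile URI
    "Example Speaker",                  # hypothetical name
    homepage="http://example.org/~speaker/",
)
```

The point of re-using FOAF is that any consumer which already understands `foaf:name` or `foaf:homepage` can read such output without site-specific knowledge.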

Guideline 2: Exposing linked data in various formats

With regard to this second guideline for exposing linked data as RDF/XML, RDFa, or as query output from a SPARQL endpoint, I found that:

- Drupal 6 RDF modules can easily export a range of linked data in RDF/XML format. (Upon official release of Drupal 7, there will be 'out of the box' support for RDFa output.)

- It was easy to set up a SPARQL endpoint with just a few configuration changes, so that it could respond (in a very wide range of formats) to queries on triples compiled automatically (via cron runs) from website content.

As a result of the transitional state of module development pending final release of Drupal 7, however, I found that RDF/XML output included eccentric ('site') vocabulary tags. In effect this produced redundant noise in RDF which, albeit distracting to the human eye, could be safely ignored by machine-read processes keyed to a standard vocabulary such as FOAF.
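A consumer keyed to FOAF can skip such site-specific elements simply by filtering on namespace. The following Python sketch shows the idea under stated assumptions: the `site` namespace URI and the sample document are invented for illustration and do not reproduce the demo site's real output.

```python
import xml.etree.ElementTree as ET

FOAF_NS = "http://xmlns.com/foaf/0.1/"

# Hypothetical RDF/XML mixing FOAF with a site-specific vocabulary.
SAMPLE_RDF = """
<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:foaf="http://xmlns.com/foaf/0.1/"
         xmlns:site="http://example.org/site#">
  <foaf:Person rdf:about="http://example.org/node/1">
    <foaf:name>Example Speaker</foaf:name>
    <site:field_internal_code>abc123</site:field_internal_code>
    <site:node_status>1</site:node_status>
  </foaf:Person>
</rdf:RDF>
"""


def foaf_properties(rdf_xml):
    """Return (property, value) pairs for FOAF elements that carry text."""
    prefix = "{" + FOAF_NS + "}"
    found = []
    for element in ET.fromstring(rdf_xml.strip()).iter():
        if element.tag.startswith(prefix) and element.text and element.text.strip():
            found.append((element.tag[len(prefix):], element.text.strip()))
    return found


foaf_only = foaf_properties(SAMPLE_RDF)
```

A full RDF parser would be the more robust choice in practice; the element-level filter here is only meant to show why the extra tags are harmless to a vocabulary-aware reader.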

Guideline 3: Mint machine-readable and human-usable URIs

Regarding this third guideline, I found that the current state of development in Drupal RDF modules could not support an ideal arrangement for automated content negotiation as implemented by DBpedia. Drupal 6 RDF modules do, however, support parallel RDF and HTML output using URI patterns such as:

- http://iwmw-rdf.ukoln.info/node/48/rdf (person profile in RDF format)

- http://iwmw-rdf.ukoln.info/node/48/ (person profile in HTML format)

Not ideal yet reasonably practical.
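For comparison, the 'ideal' arrangement of automated content negotiation amounts to choosing between these two URIs from the client's HTTP Accept header. The Python sketch below shows that selection logic in simplified form; it is not Drupal code, it ignores wildcards such as `*/*`, and the function names are hypothetical.

```python
def parse_accept(header):
    """Split an Accept header into (media_type, q) pairs; q defaults to 1.0."""
    preferences = []
    for part in header.split(","):
        pieces = [piece.strip() for piece in part.split(";")]
        media_type = pieces[0].lower()
        if not media_type:
            continue
        quality = 1.0
        for parameter in pieces[1:]:
            if parameter.startswith("q="):
                try:
                    quality = float(parameter[2:])
                except ValueError:
                    quality = 0.0  # treat a malformed q-value as "not wanted"
        preferences.append((media_type, quality))
    return preferences


def choose_path(node_path, accept_header):
    """Return the RDF path only when RDF/XML is preferred over HTML."""
    base = node_path.rstrip("/")
    scores = {"html": 0.0, "rdf": 0.0}
    for media_type, quality in parse_accept(accept_header):
        if media_type in ("text/html", "application/xhtml+xml"):
            scores["html"] = max(scores["html"], quality)
        elif media_type == "application/rdf+xml":
            scores["rdf"] = max(scores["rdf"], quality)
    if scores["rdf"] > scores["html"]:
        return base + "/rdf"
    return base + "/"  # default to the human-usable page
```

Defaulting to HTML keeps ordinary browsers on the human-usable page, while RDF-aware clients that ask for `application/rdf+xml` are pointed to the machine-readable version.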

The future?

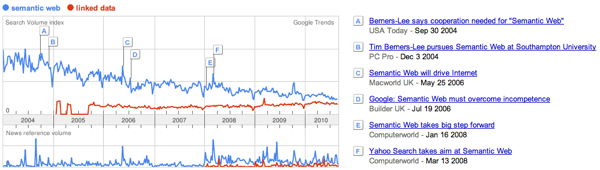

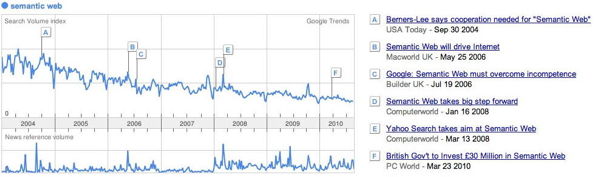

If Hausenblas, Corlosquet and others are correct about prospects for CMS developments boosting the adoption of linked data technologies, this can dramatically broaden the numbers and types of websites engaged with the Web of Data. Probably more than 7 million websites were using Drupal in July 2010 (including many large, high-traffic and high-profile websites in commercial, governmental, and academic contexts). As more websites transition into using the new Drupal 7, this can sharply increase the numbers of websites consuming and producing linked data. Is this the future as illustrated in the DrupalCon Boston 2008 keynote presentation 'Video from the future'? That keynote, which announced the start of work on integrating RDF into Drupal core, illustrated some interesting RDF 'web of data' mashups. The current focus is on increasing take-up. As illustrated by Google Trends, levels of interest in 'semantic web' technologies (as reflected in search volumes) declined steadily from 2004 to 2010.

By contrast, Google Trends indicates that search volume levels for 'linked data' are gradually rising. At this point, is active interest in 'linked data' overtaking long-established interest in the 'semantic web'? If Drupal's integration of RDF into its core functionality can help dramatically expand the number of websites engaging with linked data, this is good news for tribes on a long sojourn towards a promised land.

References

Michael Hausenblas, "Exploiting Linked Data to Build Web Applications," IEEE Internet Computing, vol. 13, no. 4, pp. 68-73, July/Aug. 2009, doi:10.1109/MIC.2009.79.

Stéphane Corlosquet, Renaud Delbru, Tim Clark, Axel Polleres, Stefan Decker, "Produce and Consume Linked Data with Drupal!", Proceedings of the 8th International Semantic Web Conference (ISWC 2009), Springer, 2009, doi:10.1007/978-3-642-04930-9_48.

Comments

Nice one Thom. Excellent piece of work. Excellent choice of examples too :) Ade

[...] has written a post on Consuming and producing linked data in a content management system in which he describes the technical details of how he used Drupal to produce his Linked data [...]

http://ukwebfocus.wordpress.com/2010/09/21/linked-data-for-events-the-iw...

Linked Data for Events: the IWMW Case Study « UK Web Focus

[...] The typical CMS is geared towards building Web pages. All modern CMS systems allow content to be managed in ‘chunks’ smaller than a whole page, such that content such as common headers, sidebars etc. can be re-used across many pages. Nonetheless, the average CMS is ultimately designed to produce Web resources which we would recognise as ‘pages’. An HEI’s web team will continue to be concerned with the site in terms of pages for human consumption. However, simply by exposing the smaller chunks of information, in machine-readable ways, the CMS can become a platform for engaging with the Web of data. My colleague Thom Bunting describes such possibilities having experimented with one popular CMS, Drupal, in Consuming and producing linked data in a content management system. [...]

http://blog.paulwalk.net/2010/09/21/institutions-and-the-web-done-better/

Institutions and the Web done better | paul walk's weblog

Thanks, Benjamin, I agree that UI developments are now key to making Linked Data more useful for 'ordinary' site maintainers. In following Drupal's community discussions re development of Drupal 7 core and contributed RDF modules, I am impressed with the range and depth of work. It will be even better, however, as more developers start to shift their focus onto making user interfaces easier to work with -- and this enables typical website managers to integrate linked data resources more readily into their websites. As mentioned in my blog post, Lin Clark's development of 'SPARQL Views' looks like a very good example of modules moving in that direction. Clearly the Drupal community is trying to make it possible for their codebase to support a very broad range of use cases -- and its growing numbers of distributions (including 'Open Publish', with some key semantic tools) are significantly helping in domain-specific contexts. As soon as possible, I would like to see available some distribution(s) packaging up the new 'semantic web' developments introduced with Drupal 7. Timing is, as always, a big factor. A strong, stable release of RDF functionality in Drupal 7 looks like it can help build real, broad-based momentum in using linked data. Even now, however, it remains a bit unclear exactly when the promised range of Drupal 7 RDF functionality (especially in 'contributed' modules) will become a working reality for many websites. Earlier this week, at the London ISKO event 'Linked Data: The Future of Knowledge Organization on the Web', I noticed that many speakers considered now a crucial opportunity for engagement with linked data. If developers can provide and package up within content management systems such as Drupal adaptable user interfaces based on robust RDF APIs, this surely can help more websites engage effectively with ever-growing 'hubs' of linked data such as DBpedia. 
Eventually, I hope this can also help more websites harness the power of 'open data' streams driven by governmental mandates (such as, here in the UK, those starting to flood into data.gov.uk as displayed in its data.gov.uk RSS feed). In any event, getting Drupal 7 released soon will be a good starting point.

Thank you for sharing your experience. That's a very helpful article for CMS developers/enhancers. I think the systems are getting pretty far in terms of generic features (import data, export in x formats, provide an API, etc), but facilitating the realization of domain-specific (and non-anticipated) use cases still needs more work. Especially the UI-related stuff, now that Linked Data allows more powerful features to bubble up to the site maintainers, who may not be developers.

[...] Consuming and producing linked data in a content management system [...]

http://blogs.ukoln.ac.uk/newsletter/2010/10/newsletter-for-september-2010/

Newsletter for September 2010 « UKOLN Update

Great work Thom, I’ll be citing this in our upcoming #INF11 Call on ‘Identifiers’.